Saarbrücken, 08 May 2025 - Artificial intelligence (AI) is only as good as the data it is developed and tested on—especially in health research, where it is crucial to understand how well a model performs under challenging conditions. To better assess the performance of AI models, researchers at the Helmholtz Institute for Pharmaceutical Research Saarland (HIPS), together with partners at Friedrich-Alexander University Erlangen-Nürnberg (FAU), have developed a new tool called DataSAIL. Offering an innovative approach to data splitting, DataSAIL sets new standards in a critical step of AI development. Their findings were recently published in the journal Nature Communications.

Developing and validating AI models requires large datasets, which are typically split into two parts: training data to teach the model and test data to evaluate its performance. For test results to be meaningful, the test data must reflect realistic use cases. If the test data is too similar to the training data, the model may appear more effective than it truly is. On the other hand, if the test data is too different, the model might underperform—even if it's well-suited for its intended application. The challenge, therefore, lies in making smart, purposeful data splits.
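The effect of overly similar test data can be illustrated with a small sketch. The Python below is not DataSAIL code. It is a minimal illustration under the assumption that every data point already carries a group label that stands in for similarity (for example, a protein family). The function names `random_split`, `group_split`, and `shared_groups` are hypothetical.

```python
import random

def random_split(items, test_fraction=0.25, seed=0):
    """Shuffle the items and use the first part as the test set."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

def group_split(items, group_of, test_fraction=0.25):
    """Move whole groups into the test set while they fit the target size."""
    groups = {}
    for item in items:
        groups.setdefault(group_of(item), []).append(item)
    target = len(items) * test_fraction
    train, test = [], []
    # Visit smaller groups first so the test set can approach the target size.
    for _, members in sorted(groups.items(), key=lambda kv: (len(kv[1]), kv[0])):
        if len(test) + len(members) <= target:
            test.extend(members)
        else:
            train.extend(members)
    return train, test

def shared_groups(train, test, group_of):
    """Groups present on both sides of the split (a crude proxy for leakage)."""
    return {group_of(x) for x in train} & {group_of(x) for x in test}

# Hypothetical toy data: four groups (A-D) with four members each.
items = [(g, i) for g in "ABCD" for i in range(4)]
group_of = lambda item: item[0]

train, test = group_split(items, group_of)
print(shared_groups(train, test, group_of))      # empty: no group is split

r_train, r_test = random_split(items)
print(shared_groups(r_train, r_test, group_of))  # usually not empty
```

A random split tends to place near-duplicates of test points in the training data, which is the situation in which a model can look better than it is. Keeping whole groups together removes that overlap, at the cost of a harder test set.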

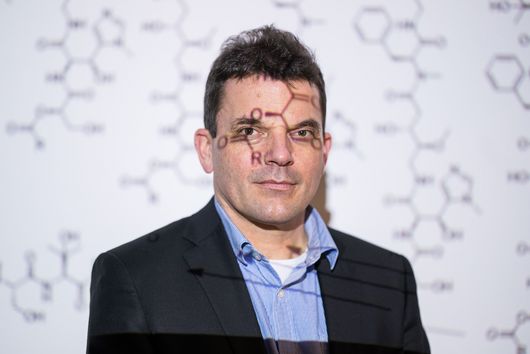

In a new study, Prof Olga Kalinina, head of the Drug Bioinformatics group at HIPS, her PhD student Roman Joeres, and Prof David Blumenthal from FAU demonstrate that creating maximally diverse test data is a mathematically complex problem. Nonetheless, they successfully developed DataSAIL—a software tool that formulates data splitting as an optimization problem and solves it efficiently. This approach allows users to generate test data that span varying levels of difficulty, from simple to highly challenging.
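To give a feel for what treating data splitting as an optimization problem can mean, the following toy sketch searches for the test set that shares the least similarity with the training set. It is an assumption-laden illustration, not DataSAIL's actual formulation or solver: the similarity matrix is made up, the names `leakage` and `best_split` are hypothetical, and the exhaustive search only works for a handful of data points.

```python
from itertools import combinations

def leakage(test_idx, sim):
    """Total similarity between test items and training items."""
    test = set(test_idx)
    train = [i for i in range(len(sim)) if i not in test]
    return sum(sim[i][j] for i in test for j in train)

def best_split(sim, n_test):
    """Try every test set of the given size and keep the one with least leakage."""
    return min(combinations(range(len(sim)), n_test),
               key=lambda candidate: leakage(candidate, sim))

# Hypothetical similarities: items 0-2 resemble each other, as do items 3-4.
sim = [
    [1.0, 0.9, 0.9, 0.1, 0.1],
    [0.9, 1.0, 0.9, 0.1, 0.1],
    [0.9, 0.9, 1.0, 0.1, 0.1],
    [0.1, 0.1, 0.1, 1.0, 0.9],
    [0.1, 0.1, 0.1, 0.9, 1.0],
]

print(best_split(sim, 2))  # picks items 3 and 4 as the test set
```

The number of candidate test sets grows combinatorially with the dataset size, which hints at why an efficient solution is needed for real datasets.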

DataSAIL sets new benchmarks in several key areas. It is the first tool to apply this data-driven splitting strategy to virtually any data type, not just biological data, as has typically been the case. It can also automatically handle interaction data, in which each data point links two kinds of entities, such as a drug and its target protein. In that case, DataSAIL ensures that similarities on both sides are factored into the split appropriately. Additionally, the tool can balance the distribution of key attributes, for instance by keeping the ratio of data points from male and female subjects similar in the training and test sets. This is vital to avoid AI models unintentionally performing better for one group than another.
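The idea of keeping attribute ratios balanced can be sketched in a few lines of Python. This is a generic stratified split, not DataSAIL's implementation, and it ignores similarity between data points entirely. The function name `stratified_split` and the toy records are hypothetical.

```python
from collections import defaultdict

def stratified_split(items, label_of, test_fraction=0.2):
    """Split each label group separately so both sets keep similar ratios."""
    by_label = defaultdict(list)
    for item in items:
        by_label[label_of(item)].append(item)
    train, test = [], []
    for label in sorted(by_label):
        members = by_label[label]
        n_test = round(len(members) * test_fraction)
        test.extend(members[:n_test])
        train.extend(members[n_test:])
    return train, test

# Hypothetical records: 60 labelled "F" and 40 labelled "M".
records = [("F", i) for i in range(60)] + [("M", i) for i in range(40)]
train, test = stratified_split(records, label_of=lambda r: r[0])

def share_of_f(rows):
    return sum(1 for r in rows if r[0] == "F") / len(rows)

print(share_of_f(train), share_of_f(test))  # both 0.6
```

Combining such a balance requirement with a similarity-aware split is harder than either task alone, since the two goals can pull in different directions.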

In benchmark tests, the researchers showed that DataSAIL generates more challenging, and thus more realistic, data splits than previous methods. This leads to more robust evaluations of a model's performance in real-world conditions.

“With DataSAIL, we’ve developed a tool that for the first time allows the targeted selection of test data to reveal the limits of a model,” says Joeres. “Only when we understand where a model breaks down can we truly improve it.” Kalinina emphasizes the importance of rigorous testing for real-world AI applications: “Reliable AI models don’t come just from better training methods; they also require realistic testing scenarios. DataSAIL provides a crucial foundation for this, not only in bioinformatics but across many AI application domains.”

Looking ahead, the team plans to develop DataSAIL further, making it faster and even more adaptable to a wide range of use cases.

Original Publication:

Joeres, R., Blumenthal, D.B. & Kalinina, O.V. Data splitting to avoid information leakage with DataSAIL. Nat Commun 16, 3337 (2025). DOI: 10.1038/s41467-025-58606-8